A Hundred-Year-Old Pedagogical Theory That Elevates AI as a Thinking Partner

| llm | prompting | learning | Time to read: 7 minutes (6,493 characters)

“Explain it so a ten-year-old would understand!” A hundred-year-old pedagogical model combined with a theory about language models has helped me think about how to best use ChatGPT, Claude, and Gemini as sounding boards and for learning new things – without losing the ability to “fly by the seat of my pants.”

Mind the gap – both in the London Underground and when using language models. Photo: Claudio Schwarz, on Unsplash.

My wife Anna is a preschool teacher. She has often described how one of her primary tasks is to meet the children just beyond what they can do and manage on their own, to guide them toward new abilities and knowledge.

This pedagogical approach is based on the theory of the zone of proximal development, formulated by Soviet psychologist Lev Vygotsky in the early 1930s. He defined it as "the difference between what an individual can do without help and what they can achieve with help and support."

One of the latest episodes of the New York Times tech podcast Hard Fork, A.I. School Is in Session: Two Takes on the Future of Education, connected what Anna had told me about Vygotsky with my use of generative AI.

In the podcast, several students describe how they use AI in their studies. One of them describes a situation that I think many who have studied at college or university can relate to: sitting in a large lecture hall and not following anything the professor is saying at the whiteboard. The gap between what you know and what is being said is too large, because the lecturer has not met the audience in their zone of proximal development.

When the Mentor Is No Longer Human

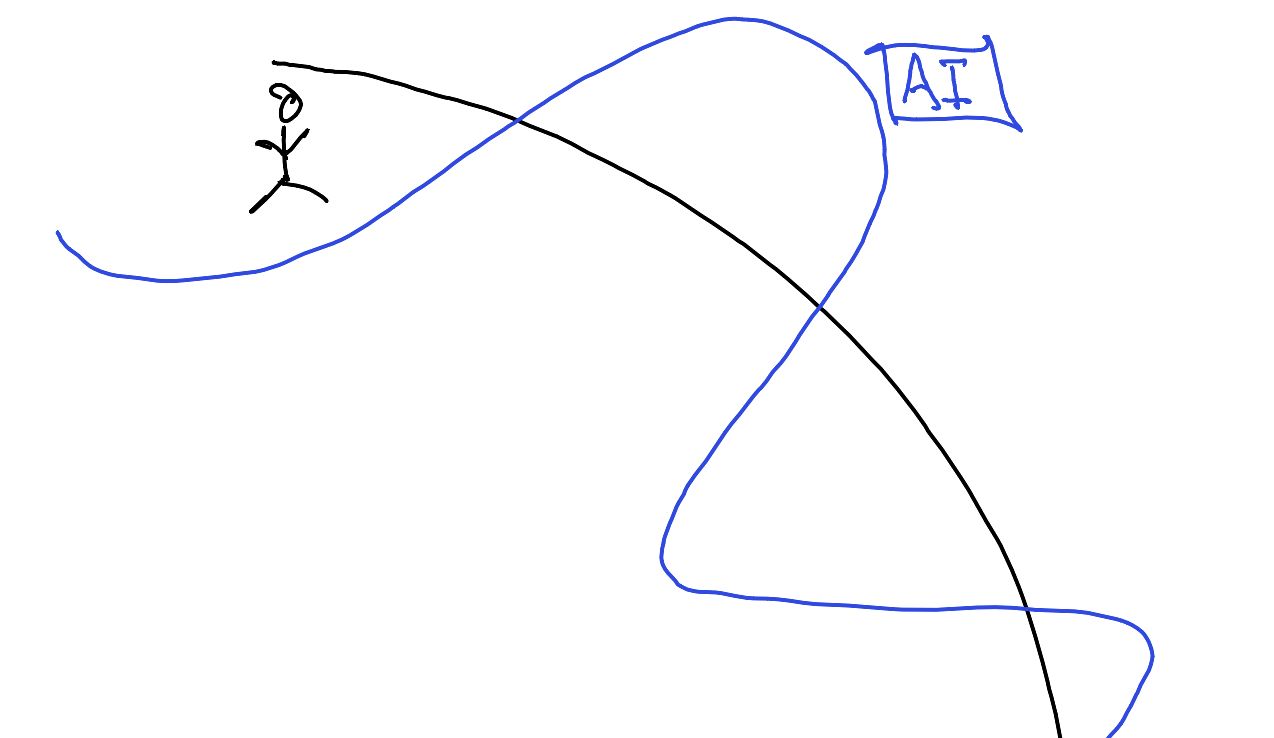

Ethan Mollick is a professor at the Wharton School at the University of Pennsylvania. On his blog and in the book Co-Intelligence, he has described what he calls generative AI's "jagged frontier." This jagged edge is Mollick's way of describing how large language models can be remarkably competent at some tasks and astonishingly poor at others. They can write fantastic poetry but cannot count syllables.

Human ability illustrated by the black line, artificial intelligence's by the blue.

Mollick's thoughts about AI's jagged edge can be combined with Vygotsky's zone of proximal development. In that fusion, the guide is no longer a human mentor but a large language model.

But unlike a human educator or mentor who can meet me where I currently am, large language models have no agency of their own, especially when we use them in general chatbots like ChatGPT and Claude. Built into more tailored solutions they may have received clear instructions, but in an open conversation they will respond in the way I ask them to. If I prompt too imprecisely and sparsely, there's a high risk that the answer will skip all the learning steps along the way and go straight to a description that is much more complex than I'm able to absorb.

Instead, I need to make it clear in my instructions that I want to be "met" just outside where my current knowledge ends, in my zone of proximal development, so that I can approach a more complete and complex understanding step by step.

But this is complicated by two things: the language model doesn't know where the boundary of my understanding lies, and I as a user cannot easily determine where the model's limitations are.

Vygotsky's theory describes the ideal: stepwise learning just beyond my current ability. Mollick's theory describes the challenge when I use language models to learn new things: they don't know what I already know.

Flying by the Seat of Your Pants

"AI-Generated 'Workslop' Is Destroying Productivity" reads the headline of a Harvard Business Review article that has been widely shared in my feeds. It describes this challenge, among others, and argues that one should strive to be a pilot and not a passenger in one's use of generative AI:

Pilots are much more likely to use AI to enhance their own creativity, for example, than passengers. Passengers, in turn, are much more likely to use AI in order to avoid doing work than pilots. Pilots use AI purposefully to achieve their goals.

The comparison between pilots and passengers reminded me of an article I wrote in Forskning och Framsteg in November 2011. It deals with the risks of too much automation, using aviation as an example: to become a good pilot who can make difficult decisions under time pressure in unexpected situations, you can't have relied completely on the autopilot up to that point. "There's an old classic pilot expression that says you should learn to 'fly by the seat of your pants,'" says one of the people quoted in the article. It's about having wrestled with the technology for so many hours that you have felt in your seat how the plane behaves in different situations. Only then do you get enough intuitive feel for the underlying technology to be able to take over if automation fails.

Over the years, I've referenced that text in many interviews, using those arguments to discuss what happens in various industries as the degree of automation increases. How do we ensure that crucial knowledge isn't lost over time because too few people can perform the tasks?

With the breakthrough of generative AI, this question is relevant even on an individual level. How do I ensure that I use language models in a way that doesn't automate away my opportunities to learn what I need to know?

Small Steps Toward New Knowledge

As users of large language models, we benefit from relating to both Vygotsky's and Mollick's theories. We need to understand both in which areas the models are actually better than us and how best to use them when we want help with our learning. Applied to language models and Mollick's idea of a jagged edge, the zone of proximal development lies in the space between what I can do myself and the knowledge represented in the model.

In the Hard Fork episode, the student goes on to give a good description of how I've experienced the models helping me build knowledge and understanding in the various subject areas I venture into:

I found AI to be really helpful for connecting the dots between different moments of clarity that you might have gotten when you were sitting in lecture or were focused and then unfocused one time. So you use AI to fill in the gaps between when you lost focus or lost understanding.

The challenge is that my zone of proximal development doesn't consist of the entire space between what I know and what the model knows, but only the part that lies just outside my own ability. Therefore, it's important to try to prompt the model so that it successively helps me move my understanding forward, instead of directly giving the correct answer.

My experience is that large knowledge gaps require more careful prompting for the model to better "understand" at what level it should operate to best help me. "Explain X so a 10-year-old would understand" is a common example of how to get language models to simplify complex concepts, but that prompt is too sweeping to work well.
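As a rough illustration of what more careful prompting could look like, here is a minimal sketch in Python. The function name `build_learning_prompt` and its three parameters are hypothetical, made up for this example. The idea is simply to tell the model what I already understand and where I get lost, rather than asking for a sweeping simplification.

```python
def build_learning_prompt(topic: str, already_know: list[str], stuck_on: str) -> str:
    """Compose a prompt that states where my understanding currently ends.

    Hypothetical helper: the wording is one possible phrasing, not a tested recipe.
    """
    # Summarize prior knowledge, or say plainly that there is none yet.
    known = "; ".join(already_know) if already_know else "nothing yet"
    return (
        f"I want to understand {topic}.\n"
        f"What I already understand: {known}.\n"
        f"Where I get lost: {stuck_on}.\n"
        "Start just beyond what I already understand, explain one step at a time, "
        "and check that I follow before moving on."
    )


prompt = build_learning_prompt(
    topic="how large language models generate text",
    already_know=["text is split into tokens", "the model predicts the next token"],
    stuck_on="how the model decides which earlier words matter",
)
print(prompt)
```

Compared with "Explain X so a 10-year-old would understand," this gives the model an explicit starting point instead of leaving it to guess the level.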

So Bring Vygotsky into the System Prompt!

This is where thoughts and theories about zones of proximal development, jagged edges, and flying by the seat of your pants merge, on both individual and system levels: How do we find ways to utilize language models to move forward without simultaneously undermining our own understanding and thus also our ability to develop?

A practical approach for me has been a new addition to my most used system prompt, the one that instructs language models to act as a sounding board. It has for some time contained the following instruction:

Meet me where I am (Vygotsky's theory of zone of proximal development): Gauge my understanding from what I write. If unclear, ask one question about my current knowledge level, then proceed.
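For readers who reach the models through code rather than a chat window, the instruction above can be placed ahead of the conversation. What follows is a minimal sketch, assuming the common convention of a list of messages with `role` and `content` fields. The helper `build_messages` is a hypothetical name, and some providers instead take the system prompt as a separate field, so check the documentation of whichever service you use.

```python
# The instruction quoted above, stored as a reusable system prompt.
SOUNDING_BOARD_INSTRUCTION = (
    "Meet me where I am (Vygotsky's theory of zone of proximal development): "
    "Gauge my understanding from what I write. If unclear, ask one question "
    "about my current knowledge level, then proceed."
)


def build_messages(
    user_text: str, system_prompt: str = SOUNDING_BOARD_INSTRUCTION
) -> list[dict[str, str]]:
    """Return a conversation with the system prompt first, then the user's turn.

    Assumption: the target service accepts role/content message dictionaries.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


messages = build_messages(
    "I keep reading about embeddings but lose the thread when vectors come up."
)
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

No network call is made here; the sketch only shows where the instruction sits relative to what I write.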

But a follow-up question remains: how do I know when I've ended up on the wrong side of the jagged edge, too far from my own ability and too close to the model's? Could that, too, be captured in a prompt instruction?